AI isn’t the enemy or the answer. From synthetic artists to deepfake influencers, a middle path toward the Authentic Individual shows how we can use technology without losing our humanity, and why the future will belong to those who still sound real.

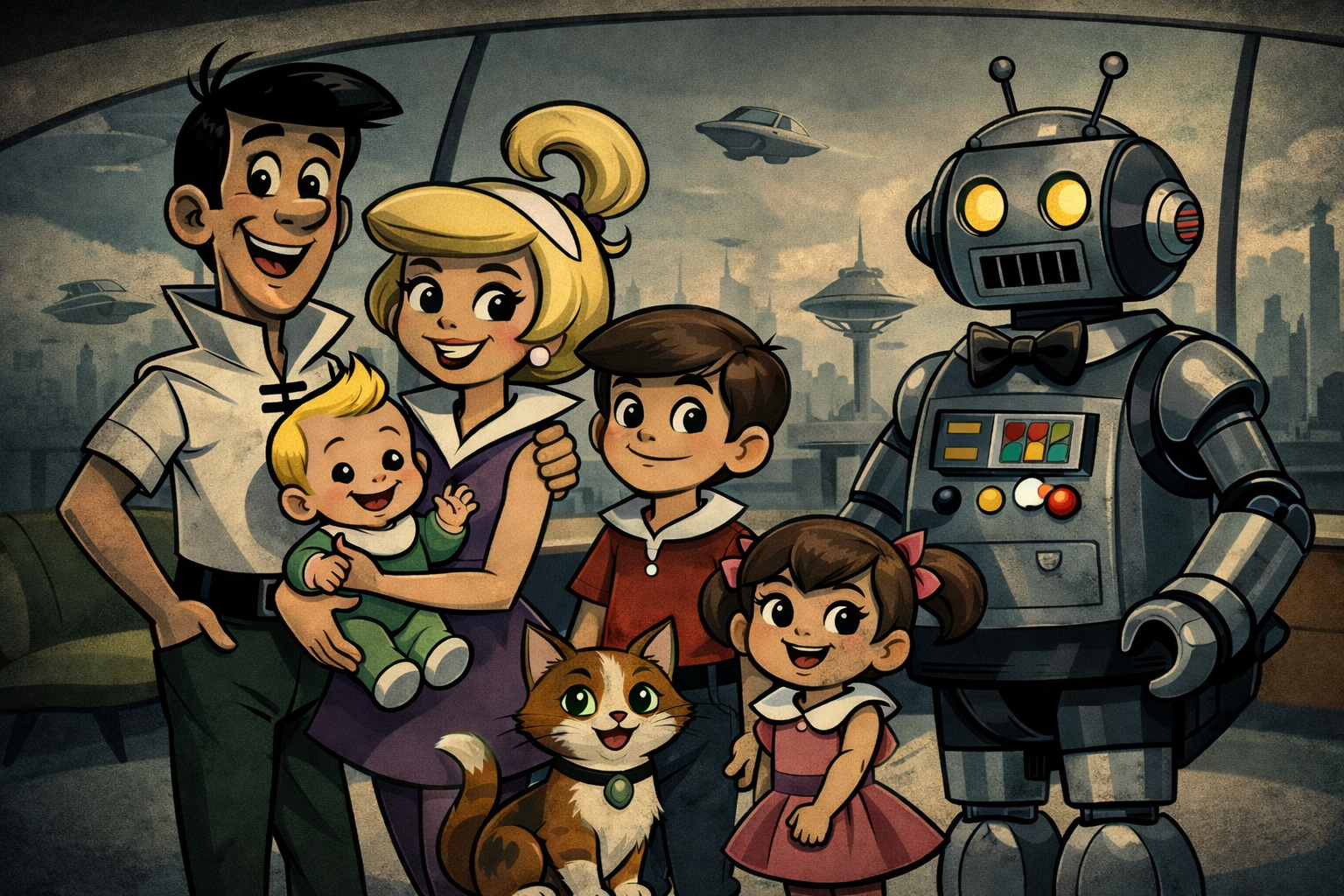

For years, we’ve talked about the future as if it would announce itself through spectacle. Through radical change. Through technologies so obvious we’d have no choice but to notice them. Hoverboards, robot servants, and flying cars are what Boomers and Gen X grew up believing was the future.

What arrived instead was quieter.

The future didn’t show up to transform us. It showed up to smooth behavior, reduce deviation, and keep things moving within a narrow band of what’s familiar, what’s comfortable, and, most important of all, what’s profitable.

Not extraordinary futures.

Predictive normalcy.

Prediction Was Always the Objective

This didn’t start with AI.

Long before algorithms, industries learned that human behavior is not nearly as chaotic as we like to believe. People repeat themselves. They cope in patterns. They seek relief the same way, at the same times, for the same reasons.

Marketers have long studied how industries organize around those repetitions. Food becomes comfort. Alcohol becomes release. Drugs become correction. Sex becomes validation. Each industry learns when demand peaks, which emotions trigger action, and how to position itself as the most efficient response.

Technology didn’t change that logic; it simply refined it.

Instead of relying on assumptions, systems could now observe behavior directly, continuously, and in context. Every interaction produced data, and every pause revealed preference. Over time, the goal moved beyond influence and became anticipation.

Rather than relying on messaging, pressure, and aspiration alone, today’s systems work upstream of persuasion itself. They don’t argue with users; they position options so the most predictable choice feels like the easiest one.

Social media offered an early blueprint. Infinite scroll, variable rewards, and algorithmic timing weren’t about entertainment alone. They were about maintaining behavioral consistency. Engagement wasn’t the outcome; it was the mechanism that allowed platforms to learn when users were most likely to continue, react, or comply.

AI didn’t replace this model. It scaled it.

Comfort as a Regulating Force

Systems no longer wait for desire to be expressed. They infer it from the time of day, from past behavior, or from micro-signals users don’t consciously register. Hunger, stress, boredom, loneliness: these states are anticipated rather than addressed after the fact.

The most successful systems of the past decade didn’t promise transformation. They promised less disruption. Auto-play keeps content flowing. Auto-renew eliminates reconsideration. Auto-fill removes hesitation. Auto-suggestions quietly narrow the field of possibility. Friction isn’t eliminated because it’s painful; it’s eliminated because it introduces variability.

Discomfort still exists, but it’s managed quickly and quietly. Food arrives before hunger becomes urgent. Alcohol is framed as self-care before stress feels unmanageable. Pharmaceuticals are positioned as maintenance rather than intervention. Technology sits between all of it, coordinating responses and reinforcing routines.

Choice Doesn’t Disappear. It Becomes Irrelevant.

We once imagined a future where machines made decisions for us. What we’ve built instead is a system where decision-making feels inefficient.

Why pause when the system already knows what usually works?

Why reconsider when the default has been optimized?

Why disrupt momentum when continuity feels better?

Autonomy hasn’t been taken away, but it has been quietly deprioritized. Over time, choice has become something we exercise only when something goes wrong. Normal life runs on prediction.

The Role of the Individual

As explored in The New AI: Authentic Individual, automation doesn’t eliminate human value; it clarifies where that value still exists. When systems generate content, recommendations, and responses at scale, what matters isn’t output. It’s judgment.

Taste. Discernment. The ability to recognize when “normal” is being engineered. But predictive systems don’t encourage that awareness. They reward smoothness, consistency, and compliance. Participation is easier than reflection. Normalcy is reinforced through convenience.

Resisting that pull doesn’t require rejecting technology. It requires noticing when optimization replaces intention.

The Cost of Predictive Normalcy

Predictive systems don’t announce themselves as constraints; they present themselves as improvements: faster responses, better timing, fewer interruptions. Life feels smoother, more efficient, and easier to navigate.

But predictability has a cost.

When systems learn what keeps us moving, they also learn what keeps us contained. Behavior that fits the model is reinforced. Behavior that doesn’t fit quietly fades out of view. Over time, the range of what feels normal narrows, not through force, but through repetition.

The future we’re living in isn’t dramatic or dystopian. It’s calibrated. Designed to keep us within a manageable band of habits, preferences, and responses. Not because anyone demanded it, but because it’s easier to serve, measure, and monetize what’s predictable.

The real question isn’t whether these systems are good or bad. It’s whether we notice when normal stops being something we choose and starts being something that’s chosen for us. And whether we remember how to tell the difference.