When everything feels fake, real becomes revolutionary.

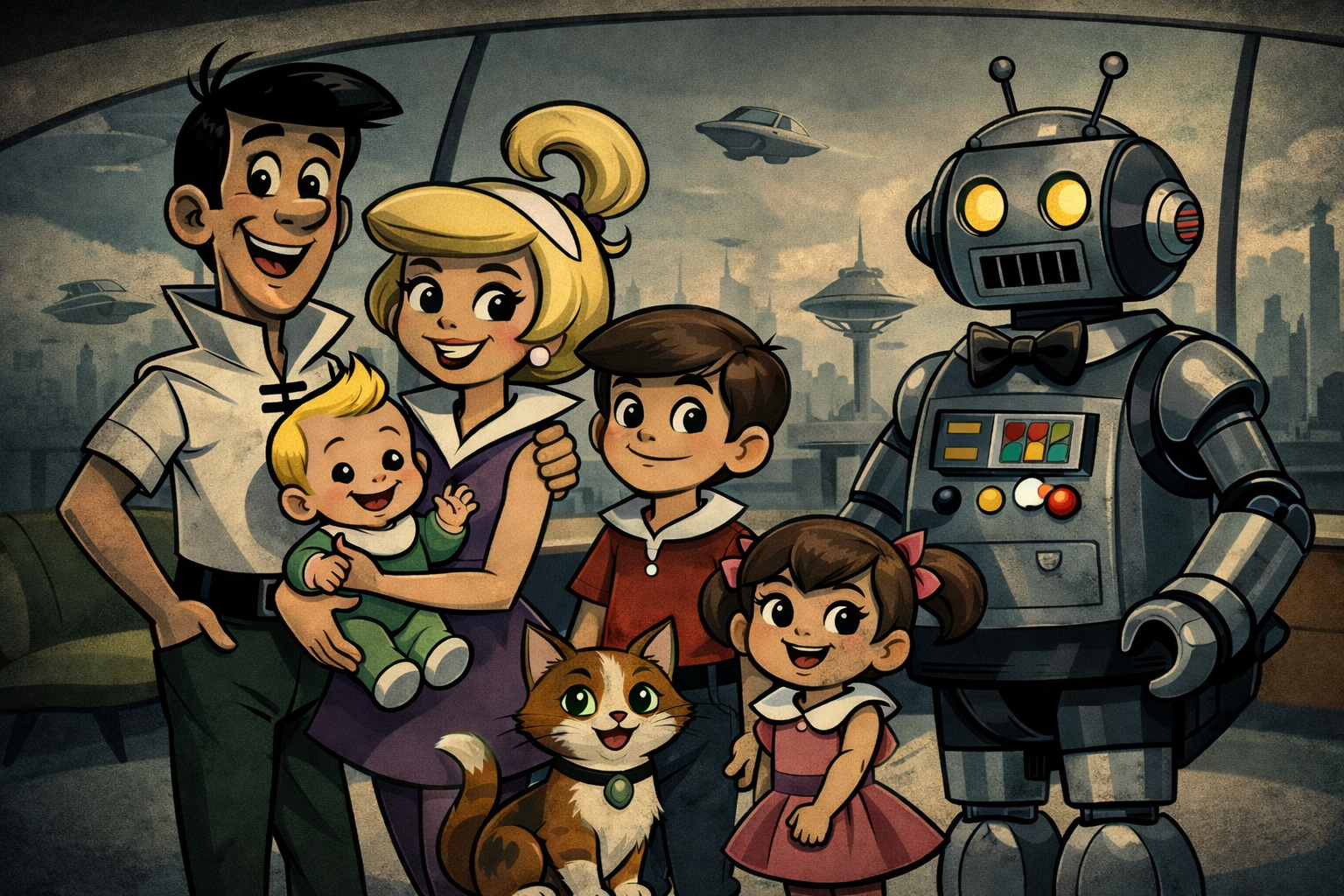

When we look at the discourse surrounding AI, it quickly becomes apparent that there are two camps: the true believers who think algorithms can replace artists, therapists, and your best friend, and the doomsday crowd who swear every chatbot is a step toward the robot apocalypse. Both are exhausting.

Somewhere in between is a saner middle path. A path to what I call the Authentic Individual.

It’s not about resisting AI or worshiping it. It’s about finding the space where technology supports human creativity, instead of swallowing it whole. The next evolution of AI adoption isn’t about speed or efficiency; it’s about realness. Authentic images. Authentic music. Authentic connections.

Because in an age when everything can be faked, we still want to know what’s real.

The False Binary

AI panic thrives on extremes. Every conversation swings between “machines are taking over” and “AI will set us free.” The truth, as usual, lives in the messy middle.

For every headline promising that AI will replace half the workforce, there’s another insisting it will unlock our highest potential. The narrative flips daily because we’re hardwired for drama. Humans don’t do nuance well. We want heroes or villains, not collaborators.

The result is a constant identity crisis: Are we the masters or the obsolete? The innovators or the endangered species? It’s easier to pick a side than to admit we’re already living in the gray area, one where algorithms finish our sentences, edit our photos, and answer our emails, but still need us to decide what matters.

Stanford’s Human-Centered AI Institute calls this middle path “human-in-the-loop” design, where systems keep people in charge of the tools, not the other way around. McKinsey calls it “agentic organizations,” where humans and digital assistants tag-team the work. Both ideas reject the fantasy of total automation in favor of collaboration, a kind of professional duet where efficiency meets empathy.

We already see it in motion: designers using AI to brainstorm faster, journalists using it to check facts (including ones that AI fabricated rather than found), doctors using it to analyze scans while still holding the patient’s hand. The pattern is clear: AI isn’t eliminating human roles; it’s reshaping them.

Call it whatever you want; the point is the same. The smartest companies are using AI to handle the boring, routine stuff so humans can focus on work that requires judgment, empathy, and creativity. The best work depends on people and machines collaborating, not competing.

That’s the future I see: not human versus machine, but human with machine. The goal isn’t to make AI “more human.” It’s to make humans more effective, without forgetting what makes us human in the first place.

AI “Artistry”

Artistry has always been emotional territory, so of course it’s become part of the AI battleground. Some content creators embrace AI-created images as a way to cut the hours spent scrolling through stock photos looking for just the right one. Others rail against “AI slop,” refusing to engage with images they say rip off real artists and illustrators.

In the music industry, we saw the AI-generated clones of Drake and The Weeknd, then the flood of machine-made tracks clogging streaming platforms. The industry’s response has been swift and surprisingly unified. Spotify deleted 75 million spam songs, banned unapproved voice cloning, and began labeling AI-assisted tracks. YouTube Music gave artists new takedown rights for AI mimicry and now requires creators to disclose synthetic content. These moves don’t reject AI; they regulate it. They’re trying to draw a line between imitation and intent.

But let’s be clear: AI is not an “artist” no matter how many times the media refers to “AI music artists.” It doesn’t get stage fright or writer’s block. It doesn’t crave applause or write about experiences. It generates patterns based on what we feed it, not passion based on what it feels.

The same manufactured “authenticity” is showing up on screen. Actor George Clooney warned that the rise of AI in entertainment is “dangerous,” saying “the genie is out of the bottle.” Deepfake technology can already copy a performer’s face or voice with uncanny precision. Now, we don’t even need a real person to copy.

The debut of Tilly Norwood, a synthetic character, made headlines for being eerily lifelike. As The Guardian put it, “She’s not art. She’s data.” Tilly Norwood isn’t acting; she’s executing. Every gesture, smile, and line delivery is an output, evidence of computation, not creation. She doesn’t bring experience to a role; she is the role, assembled from datasets built to imitate the emotional labor of real artists.

That’s the strange new tension of the creative world: we’re no longer just stealing likenesses; we’re building artificial ones from scratch. AI doesn’t need your face or your voice to mimic emotion; it can synthesize its own. The question is whether audiences will care that no one is actually behind the performance.

That’s why the push for authentic performance isn’t about banning technology; it’s about protecting the connection between artistry and humanity. When AI tools are used transparently, they can amplify creativity. When they’re prompted to simulate humanity, they hollow it out.

Authenticity doesn’t mean going analog or unplugged; it means knowing where the art came from. It means remembering that creativity isn’t a formula to be optimized; it’s a story to be lived and told, by someone who exists outside the code.

Authentic Interaction

Here’s where it gets personal.

You can feel the fatigue online. People who reject AI aren’t rejecting it because it exists; they’re rejecting it because it’s everywhere, whether they like it or not. Every feed, every search, every message feels just a little bit artificial. We’re tired of talking to scripts that pretend to be people, and of companies insisting that frictionless means better.

We’ve entered an era where you can’t always tell if the voice, face, or message on your screen is real. Deepfakes, cloned voices, and digital influencers have blurred the line between communication and simulation. What used to be conversation now feels like performance.

Platforms are trying to catch up with the backlash, and authenticity is quickly becoming the ultimate status symbol. Everyone wants proof that what they’re seeing, hearing, or buying has a verifiable pulse. The response? A new tech stack designed for transparency.

YouTube now requires creators to disclose when they use AI to generate realistic people or scenes. New “likeness detection” tools flag unauthorized copies before they spread. C2PA and Adobe’s Content Credentials let creators attach digital “proof of origin” to content, showing what’s human, what’s synthetic, and what’s been altered. Nikon’s latest cameras embed that authenticity data directly into each image. TikTok and Meta are auto-labeling AI-generated uploads. Google’s SynthID quietly watermarks AI-made text, images, and video.

We’ve basically created a nutrition label for digital content. Ingredients: 70% human, 30% machine, and trace amounts of delusion.

It’s not anti-AI; it’s pro-truth. Because when everything can be auto-generated, honesty is the new novelty.

The most forward-thinking brands already get it: automate what’s efficient, humanize what’s essential. AI works best when it supports communication, not when it replaces it. Machines can help us respond faster, but they can’t listen. They can optimize tone, but they can’t replicate sincerity. The companies that will thrive aren’t the ones that implement AI for the sake of seeming “bleeding-edge”; they’re the ones that still sound like someone’s home on the other side of the screen.

Proving Authenticity with Imperfection

At the center of all this is you. Me. Us.

For years, we’ve handed our choices to algorithms, letting feeds tell us what to like, who to follow, and what to think. But AI is forcing a reckoning. It’s making us decide what we value when we can have infinite versions of everything.

And here’s the twist: authenticity is also becoming performative. People deliberately misspell words and add typos so their writing doesn’t “sound like AI.” Others rewrite perfectly fine sentences just to avoid that suspiciously polished “machine voice.” Some even add filler words and random phrasing so their posts feel “more human.”

We’ve reached a cultural moment where imperfection itself is branding. Flaws have become proof of life.

It’s absurd and kind of poetic: humans mimicking the imperfections that machines can’t fake. In a strange way, that’s the heart of the Authentic Individual. Not rejecting the tools, but reclaiming the quirks that make us unmistakably human.

Studies from KPMG show that transparency boosts engagement and brand trust. Consumers respond better to content that tells them what’s real, what’s generated, and what’s been touched by human hands. The irony? We don’t always mind AI as long as it admits it’s AI (and the results aren’t a “sloppy eyesore”).

Being an Authentic Individual in the AI age means using the tools without erasing your voice. It means keeping your creative fingerprint visible, even when the algorithm lends a hand. The goal isn’t to sound perfect; it’s to sound like you.

Because the future of AI won’t belong to the loudest or the fastest. It’ll belong to the most authentic.